Mirror of https://github.com/ElementsProject/lightning.git (synced 2025-03-15 20:09:18 +01:00)

doc: Add guides and GitHub workflow for doc sync

This PR:

- adds all the guides (in markdown format) that are published at https://docs.corelightning.org/docs
- adds a GitHub workflow to sync any future changes made to files inside the guides folder
- does not include the API reference (json-rpc commands). Those will be handled in a separate PR, since they're used as manpages and will require a different GitHub workflow

Note that the guides do not exactly map to their related files in doc/, since we reorganized the overall documentation structure on ReadMe for better readability and developer experience. For example, doc/FUZZING.md and doc/HACKING.md#Testing are merged into testing.md in the new docs.

As of the creation date of this PR, content from each of the legacy documents has been synced with the new docs. Until this PR gets merged, I will continue to push any updates made to the legacy documents into the new docs.

If this looks reasonable, I will add a separate PR to clean up the legacy documents from doc/ (or mark them deprecated) to avoid redundant upkeep and maintenance.

Changelog-None

parent 15e86f2bba

commit e83782f5de

44 changed files with 5823 additions and 0 deletions

.github/workflows/rdme-docs-sync.yml (vendored, normal file, 23 additions)

@@ -0,0 +1,23 @@
# This GitHub Actions workflow was auto-generated by the `rdme` cli on 2023-04-22T13:16:28.430Z
# You can view our full documentation here: https://docs.readme.com/docs/rdme
name: ReadMe GitHub Action 🦉

on:
  push:
    branches:
      # This workflow will run every time you push code to the following branch: `master`
      # Check out GitHub's docs for more info on configuring this:
      # https://docs.github.com/actions/using-workflows/events-that-trigger-workflows
      - master

jobs:
  rdme-docs:
    runs-on: ubuntu-latest
    steps:
      - name: Check out repo 📚
        uses: actions/checkout@v3

      - name: Run `docs` command 🚀
        uses: readmeio/rdme@v8
        with:
          rdme: docs guides/ --key=${{ secrets.README_API_KEY }} --version=23.02

doc/guides/Beginner-s Guide/backup-and-recovery.md (normal file, 438 additions)

@@ -0,0 +1,438 @@
---
title: "Backup and recovery"
slug: "backup-and-recovery"
excerpt: "Learn the various backup and recovery options available for your Core Lightning node."
hidden: false
createdAt: "2022-11-18T16:28:17.292Z"
updatedAt: "2023-04-22T12:51:49.775Z"
---
Lightning Network channels get their scalability and privacy benefits from the very simple technique of _not telling anyone else about your in-channel activity_.
This is in contrast to onchain payments, where you have to tell everyone about each and every payment and have it recorded on the blockchain, leading to scaling problems (you have to push data to everyone, everyone needs to validate every transaction) and privacy problems (everyone knows every payment you were ever involved in).

Unfortunately, this removes a property that onchain users are so used to that they are surprised to learn it is gone.
Your onchain activity is recorded in all archival full nodes, so if you forget all your onchain activity because your storage got fried, you just go redownload the activity from the nearest archival full node.

But in Lightning, since _you_ are the only one storing all your financial information, you **_cannot_** recover this financial information from anywhere else.

This means that on Lightning, **you have to** responsibly back up your financial information yourself, using various processes and automation.

The discussion below assumes that you know where you put your `$LIGHTNINGDIR`, and you know the directory structure within. By default your `$LIGHTNINGDIR` will be in `~/.lightning/${COIN}`. For example, if you are running `--mainnet`, it will be
`~/.lightning/bitcoin`.

## `hsm_secret`

> 📘 Who should do this:
> 
> Everyone.

You need a copy of the `hsm_secret` file regardless of which backup strategy you use.

The `hsm_secret` is created when you first create the node, and does not change.
Thus, a one-time backup of `hsm_secret` is sufficient.

This is just 32 bytes, and you can do something like the below and write the hexadecimal digits a few times on a piece of paper:

```shell
cd $LIGHTNINGDIR
xxd hsm_secret
```

You can re-enter the hexdump into a text file later and use `xxd` to convert it back to a binary `hsm_secret`:

```
cat > hsm_secret_hex.txt <<HEX
00: 30cc f221 94e1 7f01 cd54 d68c a1ba f124
10: e1f3 1d45 d904 823c 77b7 1e18 fd93 1676
HEX
xxd -r hsm_secret_hex.txt > hsm_secret
chmod 0400 hsm_secret
```

Notice that you need to ensure that the `hsm_secret` is only readable by the user, and is not writable, as otherwise `lightningd` will refuse to start. Hence the `chmod 0400 hsm_secret` command.
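
The two conditions above (a 32-byte file that only its owner can read and nobody can write) can be checked before starting `lightningd`. The following is a minimal sketch, not part of Core Lightning: `check_hsm_secret` is a hypothetical helper name, and it assumes an unencrypted `hsm_secret` of exactly 32 bytes as described above.

```shell
# Hypothetical helper: returns 0 when the file is 32 bytes and has mode 0400.
check_hsm_secret() {
    file="$1"
    [ -f "$file" ] || { echo "missing: $file" >&2; return 1; }

    # Byte count of the file; tr strips the padding some wc versions add.
    size=$(wc -c < "$file" | tr -d ' ')
    [ "$size" -eq 32 ] || { echo "unexpected size: $size bytes" >&2; return 1; }

    # The first ten characters of `ls -l` are the file type and permission bits.
    perms=$(ls -l "$file" | cut -c1-10)
    [ "$perms" = "-r--------" ] || { echo "unexpected permissions: $perms" >&2; return 1; }
}
```

For example, `check_hsm_secret "$LIGHTNINGDIR/hsm_secret" && echo "hsm_secret looks sane"` prints the message only when both checks pass.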

Alternatively, if you are deploying a new node that has no funds and channels yet, you can generate BIP39 words using any process, and create the `hsm_secret` using the `hsmtool generatehsm` command.
If you did `make install` then `hsmtool` is installed as [`lightning-hsmtool`](ref:lightning-hsmtool), else you can find it in the `tools/` directory of the build directory.

```shell
lightning-hsmtool generatehsm hsm_secret
```

Then enter the BIP39 words, plus an optional passphrase. Then copy the `hsm_secret` to `${LIGHTNINGDIR}`.

You can regenerate the same `hsm_secret` file using the same BIP39 words, which again, you can back up on paper.

Recovery of the `hsm_secret` is sufficient to recover any onchain funds.
Recovery of the `hsm_secret` is necessary, but insufficient, to recover any in-channel funds.
To recover in-channel funds, you need to use one or more of the other backup strategies below.

## SQLITE3 `--wallet=${main}:${backup}` And Remote NFS Mount

> 📘 Who should do this:
> 
> Casual users.

> 🚧 
> 
> This technique is only supported in versions after v0.10.2 (v0.10.2 itself is not included).
> 
> On earlier versions, the `:` character is not special and will be considered part of the path of the database file.

When using the SQLITE3 backend (the default), you can specify a second database file to replicate to, by separating the second file with a single `:` character in the `--wallet` option, after the main database filename.

For example, if the user running `lightningd` is named `user`, and you are on the Bitcoin mainnet with the default `${LIGHTNINGDIR}`, you can specify in your `config` file:

```shell
wallet=sqlite3:///home/user/.lightning/bitcoin/lightningd.sqlite3:/my/backup/lightningd.sqlite3
```

Or via command line:

```
lightningd --wallet=sqlite3:///home/user/.lightning/bitcoin/lightningd.sqlite3:/my/backup/lightningd.sqlite3
```

If the second database file does not exist but the directory that would contain it does exist, the file is created.
If the directory of the second database file does not exist, `lightningd` will fail at startup.
If the second database file already exists, on startup it will be overwritten with the main database.
During operation, all database updates will be done on both databases.
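
Because `lightningd` fails at startup when the backup directory is missing, a small pre-flight check can catch an unmounted NAS or an unplugged disk before the node is started. This is only an illustrative sketch: `backup_dir_ready` is a hypothetical helper, and the path in the usage comment is the example path from above.

```shell
# Hypothetical helper: succeeds only if the directory that will hold the
# backup database exists and is writable by the current user.
backup_dir_ready() {
    backup_file="$1"
    backup_dir=$(dirname "$backup_file")
    [ -d "$backup_dir" ] && [ -w "$backup_dir" ]
}

# Usage (example path only):
#   backup_dir_ready /my/backup/lightningd.sqlite3 || echo "backup directory not available" >&2
```

The check does not prove that the directory is actually on the remote mount; it only confirms that the path `lightningd` will write to is present.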

The main and backup files will **not** be identical at every byte, but they will still contain the same data.

It is recommended that you use **the same filename** for both files, just in different directories.

This has the advantage compared to the `backup` plugin below of requiring exactly the same amount of space on both the main and backup storage. The `backup` plugin will take more space on the backup than on the main storage.
It has the disadvantage that it will only work with the SQLITE3 backend and is not supported by the PostgreSQL backend, and is unlikely to be supported on any future database backends.

You can only specify _one_ replica.

It is recommended that you use a network-mounted filesystem for the backup destination.
For example, you could use a NAS that you can access remotely.

At the minimum, set the backup to a different storage device.
This is no better than just using RAID-1 (and the RAID-1 will probably be faster), but it is easier to set up: just plug in a commodity USB flash disk (with metal casing, since a lot of writes are done and you need to dissipate the heat quickly) and use it as the backup location, without repartitioning your OS disk, for example.
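
One rough way to notice that the main and backup paths ended up on the same filesystem is to compare their device IDs. The sketch below assumes GNU coreutils `stat` (BSD and macOS use a different syntax), and `same_device` is a hypothetical helper name. Treat it as a heuristic: matching IDs mean the two paths share a filesystem, but differing IDs do not prove that they are on separate physical disks (two partitions of one disk also differ).

```shell
# Hypothetical helper: succeeds when both paths report the same device ID.
# Returns 2 if `stat` itself fails (for example, when a path does not exist).
same_device() {
    dev_a=$(stat -c %d "$1") || return 2
    dev_b=$(stat -c %d "$2") || return 2
    [ "$dev_a" = "$dev_b" ]
}

# Usage (example paths only):
#   same_device /home/user/.lightning/bitcoin /my/backup \
#       && echo "warning: backup is on the same filesystem as the main database" >&2
```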

> 📘 
> 
> Do note that files are not stored encrypted, so you should really not do this with rented space ("cloud storage").

To recover, simply get **all** the backup database files.
Note that SQLITE3 will sometimes create a `-journal` or `-wal` file, which is necessary to ensure correct recovery of the backup; you need to copy those too, with corresponding renames if you use a different filename for the backup database. For example, if you named the backup `backup.sqlite3` and when you recover you find `backup.sqlite3` and `backup.sqlite3-journal` files, you rename `backup.sqlite3` to `lightningd.sqlite3` and `backup.sqlite3-journal` to `lightningd.sqlite3-journal`.
Note that the `-journal` or `-wal` file may or may not exist, but if they _do_, you _must_ recover them as well. (There can be an `-shm` file as well in WAL mode, but it is unnecessary; it is only used by SQLITE3 as a hack for portable shared memory, and contains no useful data; SQLITE3 will always ignore its contents.)
It is recommended that you use **the same filename** for both main and backup databases (just in different directories), and put the backup in its own directory, so that you can just recover all the files in that directory without worrying about missing any needed files or correctly renaming.
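
If the backup does use a different filename, the copy-and-rename step described above can be sketched as follows. `restore_sqlite_backup` is a hypothetical helper, the paths in the comments are examples, and the sketch assumes `lightningd` is not running while the files are copied.

```shell
# Hypothetical helper: copy a backup database and any SQLITE3 side files
# into a new lightning directory, renaming them to lightningd.sqlite3*.
restore_sqlite_backup() {
    backup_db="$1"      # e.g. /my/backup/backup.sqlite3
    target_dir="$2"     # e.g. the new $LIGHTNINGDIR

    cp -p "$backup_db" "$target_dir/lightningd.sqlite3" || return 1

    # The -journal and -wal files may or may not exist; copy them if they do.
    # The -shm file is deliberately skipped, since it holds no useful data.
    for suffix in -journal -wal; do
        if [ -f "$backup_db$suffix" ]; then
            cp -p "$backup_db$suffix" "$target_dir/lightningd.sqlite3$suffix" || return 1
        fi
    done
}
```

Using the same filename for main and backup databases, as recommended, makes this helper unnecessary: copying the whole backup directory is then enough.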

If your backup destination is a network-mounted filesystem that is in a remote location, then even loss of all hardware in one location will allow you to still recover your Lightning funds.

However, if instead you are just replicating the database on another storage device in a single location, you remain vulnerable to disasters like fire or computer confiscation.

## `backup` Plugin And Remote NFS Mount

> 📘 Who should do this:
> 
> Casual users.

You can find the full source for the `backup` plugin here:
<https://github.com/lightningd/plugins/tree/master/backup>

The `backup` plugin requires Python 3.

- Download the source for the plugin.
  - `git clone https://github.com/lightningd/plugins.git`
- `cd` into its directory and install requirements.
  - `cd plugins/backup`
  - `pip3 install -r requirements.txt`
- Figure out where you will put the backup files.
  - Ideally you have an NFS or other network-based mount on your system, into which you will put the backup.
- Stop your Lightning node.
- `/path/to/backup-cli init --lightning-dir ${LIGHTNINGDIR} file:///path/to/nfs/mount/file.bkp`.
  This creates an initial copy of the database at the NFS mount.
- Add these settings to your `lightningd` configuration:
  - `important-plugin=/path/to/backup.py`
- Restart your Lightning node.

It is recommended that you use a network-mounted filesystem for the backup destination.
For example, you could use a NAS that you can access remotely.

> 📘 
> 
> Do note that files are not stored encrypted, so you should really not do this with rented space ("cloud storage").

Alternatively, you _could_ put it in another storage device (e.g. USB flash disk) in the same physical location.

To recover:

- Re-download the `backup` plugin and install Python 3 and the requirements of `backup`.
- `/path/to/backup-cli restore file:///path/to/nfs/mount/file.bkp ${LIGHTNINGDIR}`

If your backup destination is a network-mounted filesystem that is in a remote location, then even loss of all hardware in one location will allow you to still recover your Lightning funds.

However, if instead you are just replicating the database on another storage device in a single location, you remain vulnerable to disasters like fire or computer confiscation.

## Filesystem Redundancy

> 📘 Who should do this:
> 
> Filesystem nerds, data hoarders, home labs, enterprise users.

You can set up a RAID-1 with multiple storage devices, and point the `$LIGHTNINGDIR` to the RAID-1 setup. That way, failure of one storage device will still let you recover funds.

You can use a hardware RAID-1 setup, or just buy multiple commodity storage media you can add to your machine and use a software RAID, such as (not an exhaustive list!):

- `mdadm` to create a virtual volume which is the RAID combination of multiple physical media.
- BTRFS RAID-1 or RAID-10, a filesystem built into Linux.
- ZFS RAID-Z, a filesystem that cannot be legally distributed with the Linux kernel, but can be distributed in a BSD system, and can be installed on Linux with some extra effort, see
  [ZFSonLinux](https://zfsonlinux.org).

RAID-1 (whether by hardware, or software) like the above protects against failure of a single storage device, but does not protect you in case of certain disasters, such as fire or computer confiscation.

You can "just" use a pair of high-quality metal-casing USB flash devices (you need metal-casing since the devices will have a lot of small writes, which will cause a lot of heating, which needs to dissipate very fast, otherwise the flash device firmware will internally disconnect the flash device from your computer, reducing your reliability) in RAID-1, if you have enough USB ports.

### Example: BTRFS on Linux

On a Linux system, one of the simpler things you can do would be to use BTRFS RAID-1 setup between a partition on your primary storage and a USB flash disk.

The below "should" work, but assumes you are comfortable with low-level Linux administration.
If you are on a system that would make you cry if you break it, you **MUST** stop your Lightning node and back up all files before doing the below.

- Install `btrfs-progs` or `btrfs-tools` or equivalent.
- Get a 32GB USB flash disk.
- Stop your Lightning node and back up everything, do not be stupid.
- Repartition your hard disk to have a 30GB partition.
  - This is risky and may lose your data, so this is best done with a brand-new hard disk that contains no data.
- Connect the USB flash disk.
- Find the `/dev/sdXX` devices for the HDD 30GB partition and the flash disk.
  - `lsblk -o NAME,TYPE,SIZE,MODEL` should help.
- Create a RAID-1 `btrfs` filesystem.
  - `mkfs.btrfs -m raid1 -d raid1 /dev/${HDD30GB} /dev/${USB32GB}`
  - You may need to add `-f` if the USB flash disk is already formatted.
- Create a mountpoint for the `btrfs` filesystem.
- Create a `/etc/fstab` entry.
  - Use the `UUID` option instead of `/dev/sdXX` since the exact device letter can change across boots.
  - You can get the UUID by `lsblk -o NAME,UUID`. Specifying _either_ of the devices is sufficient.
  - Add `autodefrag` option, which tends to work better with SQLITE3 databases.
  - e.g. `UUID=${UUID} ${BTRFSMOUNTPOINT} btrfs defaults,autodefrag 0 0`
- `mount -a` then `df` to confirm it got mounted.
- Copy the contents of the `$LIGHTNINGDIR` to the BTRFS mount point.
  - Copy the entire directory, then `chown -R` the copy to the user who will run the `lightningd`.
  - If you are paranoid, run `diff -r` on both copies to check.
- Remove the existing `$LIGHTNINGDIR`.
- `ln -s ${BTRFSMOUNTPOINT}/lightningdirname ${LIGHTNINGDIR}`.
  - Make sure the `$LIGHTNINGDIR` has the same structure as what you originally had.
- Add `crontab` entries for `root` that perform regular `btrfs` maintenance tasks.
  - `0 0 * * * /usr/bin/btrfs balance start -dusage=50 -dlimit=2 -musage=50 -mlimit=4 ${BTRFSMOUNTPOINT}`
    This prevents BTRFS from running out of blocks even if it has unused space _within_ blocks, and is run at midnight every day. You may need to change the path to the `btrfs` binary.
  - `0 0 * * 0 /usr/bin/btrfs scrub start -B -c 2 -n 4 ${BTRFSMOUNTPOINT}`
    This detects bit rot (i.e. bad sectors) and auto-heals the filesystem, and is run on Sundays at midnight.
- Restart your Lightning node.

If one or the other device fails completely, shut down your computer, boot on a recovery disk or similar, then:

- Connect the surviving device.
- Mount the partition/USB flash disk in `degraded` mode:
  - `mount -o degraded /dev/sdXX /mnt/point`
- Copy the `lightningd.sqlite3` and `hsm_secret` to new media.
  - Do **not** write to the degraded `btrfs` mount!
- Start up a `lightningd` using the `hsm_secret` and `lightningd.sqlite3` and close all channels and move all funds to onchain cold storage you control, then set up a new Lightning node.

If the device that fails is the USB flash disk, you can replace it using BTRFS commands.
You should probably stop your Lightning node while doing this.

- `btrfs replace start /dev/sdOLD /dev/sdNEW ${BTRFSMOUNTPOINT}`.
  - If `/dev/sdOLD` no longer even exists because the device is really really broken, use `btrfs filesystem show` to see the number after `devid` of the broken device, and use that number instead of `/dev/sdOLD`.
- Monitor status with `btrfs replace status ${BTRFSMOUNTPOINT}`.

More sophisticated setups with more than two devices are possible. Take note that "RAID 1" in `btrfs` means "data is copied on up to two devices", meaning only up to one device can fail.
You may be interested in `raid1c3` and `raid1c4` modes if you have three or four storage devices. BTRFS would probably work better if you were purchasing an entire set of new storage devices to set up a new node.

## PostgreSQL Cluster

> 📘 Who should do this:
> 
> Enterprise users, whales.

`lightningd` may also be compiled with PostgreSQL support.

PostgreSQL is generally faster than SQLITE3, and also supports running a PostgreSQL cluster to be used by `lightningd`, with automatic replication and failover in case an entire node of the PostgreSQL cluster fails.

Setting this up, however, is more involved.

By default, `lightningd` compiles with PostgreSQL support **only** if it finds `libpq` installed when you `./configure`. To enable it, you have to install a developer version of `libpq`. On most Debian-derived systems that would be `libpq-dev`. To verify you have it properly installed on your system, check if the following command gives you a path:

```shell
pg_config --includedir
```

Versioning may also matter to you.
For example, Debian Stable ("buster") as of late 2020 provides PostgreSQL 11.9 for the `libpq-dev` package, but Ubuntu LTS ("focal") of 2020 provides PostgreSQL 12.5.
Debian Testing ("bullseye") uses PostgreSQL 13.0 as of this writing. PostgreSQL 12 had a non-trivial change in the way the restore operation is done for replication.

You should use the same PostgreSQL version of `libpq-dev` as what you run on your cluster, which probably means running the same distribution on your cluster.

Once you have decided on a specific version you will use throughout, refer as well to the "synchronous replication" document of PostgreSQL for the **specific version** you are using:

- [PostgreSQL 11](https://www.postgresql.org/docs/11/runtime-config-replication.html)
- [PostgreSQL 12](https://www.postgresql.org/docs/12/runtime-config-replication.html)
- [PostgreSQL 13](https://www.postgresql.org/docs/13/runtime-config-replication.html)

You then have to compile `lightningd` with PostgreSQL support.

- Clone or untar a new source tree for `lightning` and `cd` into it.
  - You _could_ just use `make clean` on an existing one, but for the avoidance of doubt (and potential bugs in our `Makefile` cleanup rules), just create a fresh source tree.
- `./configure`
  - Add any options to `configure` that you normally use as well.
- Double-check the `config.vars` file contains `HAVE_POSTGRES=1`.
  - `grep 'HAVE_POSTGRES' config.vars`
- `make`
- If you want to install `lightningd`, run `sudo make install`.
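
The `config.vars` check in the steps above can be turned into a guard that stops a build script before `make` when PostgreSQL support was not detected. This is a sketch under the assumption, taken from the text above, that `config.vars` contains the literal string `HAVE_POSTGRES=1` when support is enabled; `has_postgres_support` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds only if config.vars records PostgreSQL support.
# The file defaults to ./config.vars in the current source tree.
has_postgres_support() {
    vars_file="${1:-config.vars}"
    grep -q 'HAVE_POSTGRES=1' "$vars_file"
}

# Usage, from inside the source tree after ./configure:
#   has_postgres_support || { echo "libpq not detected; install libpq-dev and re-run ./configure" >&2; exit 1; }
```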

If you were not using PostgreSQL before but have compiled and used `lightningd` on your system, the resulting `lightningd` will still continue supporting and using your current SQLITE3 database; it just gains the option to use a PostgreSQL database as well.

If you just want to use PostgreSQL without using a cluster (for example, as an initial test without risking any significant funds), then after setting up a PostgreSQL database, you just need to add
`--wallet=postgres://${USER}:${PASSWORD}@${HOST}:${PORT}/${DB}` to your `lightningd` config or invocation.
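
As a concrete illustration of that template, the sketch below fills in the five placeholders and prints the line in the form used in a `config` file. All values are made up, `postgres_wallet_line` is a hypothetical helper, and the sketch assumes none of the values contain characters that would need URL-escaping.

```shell
# Hypothetical helper: prints a wallet= line for the lightningd config file.
# Arguments, in order: user, password, host, port, database name.
postgres_wallet_line() {
    printf 'wallet=postgres://%s:%s@%s:%s/%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Example with made-up credentials:
postgres_wallet_line cln s3cret 127.0.0.1 5432 lightningd
# prints: wallet=postgres://cln:s3cret@127.0.0.1:5432/lightningd
```

Keeping the password in the `config` file rather than on the command line avoids exposing it in the process list.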

To set up a cluster for a brand new node, follow this (external) [guide by @gabridome](https://github.com/gabridome/docs/blob/master/c-lightning_with_postgresql_reliability.md).

The above guide assumes you are setting up a new node from scratch. It is also specific to PostgreSQL 12, and setting up for other versions **will** have differences; read the PostgreSQL manuals linked above.

> 🚧 
> 
> If you want to continue a node that started using an SQLITE3 database, note that we do not support this. You should set up a new PostgreSQL node, move funds from the SQLITE3 node to the PostgreSQL node, then shut down the SQLITE3 node permanently.

There are also more ways to set up PostgreSQL replication.
In general, you should use [synchronous replication](https://www.postgresql.org/docs/13/warm-standby.html#SYNCHRONOUS-REPLICATION), since `lightningd` assumes that once a transaction is committed, it is saved in all permanent storage. This can make remote replicas difficult to set up because of the latency.

## SQLite Litestream Replication

> 🚧 
> 
> Previous versions of this document recommended this technique, but we no longer do so.
> According to [issue 4857](https://github.com/ElementsProject/lightning/issues/4857), even with a 60-second timeout that we added in 0.10.2, this leads to constant crashing of `lightningd` in some situations. This section will be removed completely six months after 0.10.3. Consider using `--wallet=sqlite3://${main}:${backup}` above instead.

One of the simpler things on any system is to use Litestream to replicate the SQLite database. It continuously streams SQLite changes to file or external storage; the cloud storage option should not be used.
Backups/replication should not be on the same disk as the original SQLite DB.

You need to enable WAL mode on your database.
To do so, first stop `lightningd`, then:

```shell
$ sqlite3 lightningd.sqlite3
sqlite> PRAGMA journal_mode = WAL;
sqlite> .quit
```

Then just restart `lightningd`.

Create `/etc/litestream.yml`:

```yaml
dbs:
  - path: /home/bitcoin/.lightning/bitcoin/lightningd.sqlite3
    replicas:
      - path: /media/storage/lightning_backup
```

Then start the service using `systemctl`:

```shell
$ sudo systemctl start litestream
```

To restore:

```shell
$ litestream restore -o /media/storage/lightning_backup /home/bitcoin/restore_lightningd.sqlite3
```

Because Litestream only copies small changes and not the entire database (holding a read lock on the file while doing so), the 60-second timeout on locking should not be reached unless something has made your backup medium very very slow.

Litestream has its own timer, so there is a tiny (but non-negligible) probability that `lightningd` updates the
database, then irrevocably commits to the update by sending revocation keys to the counterparty, and _then_ your main storage media crashes before Litestream can replicate the update.

Treat this as a superior version of the "Database File Backups" section below and prefer recovering via other backup methods first.

## Database File Backups

> 📘 Who should do this:
> 
> Those who already have at least one of the other backup methods, those who are #reckless.

This is the least desirable backup strategy, as it _can_ lead to loss of all in-channel funds if you use it.
However, having _no_ backup strategy at all _will_ lead to loss of all in-channel funds, so this is still better than nothing.

This backup method is undesirable, since it cannot recover the following channels:

- Channels with peers that do not support `option_dataloss_protect`.
  - Most nodes on the network already support `option_dataloss_protect` as of November 2020.
  - If the peer does not support `option_dataloss_protect`, then the entire channel funds will be revoked by the peer.
  - Peers can _claim_ to honestly support this, but later steal funds from you by giving obsolete state when you recover.
- Channels created _after_ the copy was made are not recoverable.
  - Data for those channels does not exist in the backup, so your node cannot recover them.

Because of the above, this strategy is discouraged: you _can_ potentially lose all funds in open channels.

However, again, note that a "no backups #reckless" strategy leads to _definite_ loss of funds, so you should still prefer _this_ strategy rather than having _no_ backups at all.

Even if you have one of the better options above, you might still want to do this as a worst-case fallback, as long as you:

- Attempt to recover using the other backup options above first. Any one of them will be better than this backup option.
- Recover by this method **ONLY** as a **_last_** resort.
- Recover using the most recent backup you can find. Take time to look for the most recent available backup.

Again, this strategy can lead to only **_partial_** recovery of funds, or even to complete failure to recover, so use the other methods first to recover!

### Offline Backup

While `lightningd` is not running, just copy the `lightningd.sqlite3` file in the `$LIGHTNINGDIR` to backup media somewhere.

To recover, just copy the backed up `lightningd.sqlite3` into your new `$LIGHTNINGDIR` together with the `hsm_secret`.

You can also use any automated backup system as long as it includes the `lightningd.sqlite3` file and copies the file while `lightningd` is not running. You can optionally include `hsm_secret` as well, but note that it is a secret key: a thief who gets a copy of your backups may be able to steal your funds, even in-channel funds.

### Backing Up While `lightningd` Is Running

Since `sqlite3` will be writing to the file while `lightningd` is running, `cp`ing the `lightningd.sqlite3` file while `lightningd` is running may result in the file not being copied properly if `sqlite3` happens to be committing database transactions at that time, potentially leading to a corrupted backup file that cannot be recovered from.

You have to stop `lightningd` before copying the database to backup in order to ensure that backup files are not corrupted, and in particular, wait for the `lightningd` process to exit.
Obviously, this is disruptive to node operations, so you might prefer to just perform the `cp` even if the backup potentially is corrupted. As long as you maintain multiple backups sampled at different times, this may be more acceptable than stopping and restarting `lightningd`; the corruption only exists in the backup, not in the original file.
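
Keeping "multiple backups sampled at different times" can be as simple as copying to a timestamped filename and pruning the oldest copies. The sketch below is one possible way to do that, not a Core Lightning feature: `snapshot_db`, the filename pattern, and the default of seven retained copies are all assumptions made for illustration, and each copy may still be corrupted for the reason given above.

```shell
# Hypothetical helper: copy the database to a timestamped file and keep
# only the newest $keep copies in the backup directory.
snapshot_db() {
    db="$1"            # e.g. $LIGHTNINGDIR/lightningd.sqlite3
    dest_dir="$2"      # a directory on a different storage device
    keep="${3:-7}"     # number of copies to retain (arbitrary default)

    stamp=$(date -u +%Y%m%dT%H%M%SZ)
    cp "$db" "$dest_dir/lightningd.sqlite3.$stamp" || return 1

    # UTC timestamps in this format sort lexicographically, oldest first.
    total=$(ls "$dest_dir"/lightningd.sqlite3.* | wc -l)
    excess=$((total - keep))
    if [ "$excess" -gt 0 ]; then
        ls "$dest_dir"/lightningd.sqlite3.* | sort | head -n "$excess" |
            while IFS= read -r old; do rm -f "$old"; done
    fi
}
```

A `crontab` entry could call this helper at whatever interval suits the node; when recovering, start from the most recent copy that passes an integrity check.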

If the filesystem or volume manager containing `$LIGHTNINGDIR` has a snapshot facility, you can take a snapshot of the filesystem, then mount the snapshot, copy `lightningd.sqlite3`, unmount the snapshot, and then delete the snapshot.
Similarly, if the filesystem supports a "reflink" feature, such as `cp -c` on an APFS on macOS, or `cp --reflink=always` on an XFS or BTRFS on Linux, you can also use that, then copy the reflinked copy to a different storage medium; this is equivalent to a snapshot of a single file.
This _reduces_ but does not _eliminate_ this race condition, so you should still maintain multiple backups.

You can additionally perform a check of the backup by this command:

```shell
echo 'PRAGMA integrity_check;' | sqlite3 ${BACKUPFILE}
```

This will result in the string `ok` being printed if the backup is **likely** not corrupted.
If the result is anything other than `ok`, the backup is definitely corrupted and you should make another copy.
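
For use in a script, the same check can be wrapped so that it yields an exit status instead of text. This is a minimal sketch: `backup_looks_ok` is a hypothetical helper, and it first confirms that the file exists, because the `sqlite3` command-line tool treats a missing file as a new, empty database, which would also report `ok`.

```shell
# Hypothetical helper: succeeds only if the file exists and SQLITE3's
# integrity check prints exactly "ok".
backup_looks_ok() {
    [ -f "$1" ] || return 1
    result=$(echo 'PRAGMA integrity_check;' | sqlite3 "$1" 2>/dev/null)
    [ "$result" = "ok" ]
}

# Usage:
#   backup_looks_ok "${BACKUPFILE}" || echo "backup is corrupted; make another copy" >&2
```

As stated above, a passing check only means the copy is likely intact, so it does not replace keeping several backups.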

In order to make a proper uncorrupted backup of the SQLITE3 file while `lightningd` is running, we would need to have `lightningd` perform the backup itself, which, as of the version at the time of this writing, is not yet implemented.

Even if the backup is not corrupted, take note that this backup strategy should still be a last resort; recovery of all funds is still not assured with this backup strategy.

`sqlite3` has `.dump` and `VACUUM INTO` commands, but note that those lock the main database for long time periods, which will negatively affect your `lightningd` instance.

76

doc/guides/Beginner-s Guide/beginners-guide.md

Normal file

|

|

@ -0,0 +1,76 @@

|

|||

---

|

||||

title: "Running your node"

|

||||

slug: "beginners-guide"

|

||||

excerpt: "A guide to all the basics you need to get up and running immediately."

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T14:27:50.098Z"

|

||||

updatedAt: "2023-02-21T13:49:20.132Z"

|

||||

---

|

||||

## Starting `lightningd`

|

||||

|

||||

#### Regtest (local, fast-start) option

|

||||

|

||||

If you want to experiment with `lightningd`, there's a script to set up a `bitcoind` regtest test network of two local lightning nodes, which provides a convenient `start_ln` helper. See the notes at the top of the `startup_regtest.sh` file for details on how to use it.

|

||||

|

||||

```bash

|

||||

. contrib/startup_regtest.sh

|

||||

```

|

||||

|

||||

|

||||

|

||||

Note that your local nodeset will be much faster/more responsive if you've configured your node to expose the developer options, e.g.

|

||||

|

||||

```bash

|

||||

./configure --enable-developer

|

||||

```

|

||||

|

||||

|

||||

|

||||

#### Mainnet Option

|

||||

|

||||

To test with real bitcoin, you will need to have a local `bitcoind` node running:

|

||||

|

||||

```bash

|

||||

bitcoind -daemon

|

||||

```

|

||||

|

||||

|

||||

|

||||

Wait until `bitcoind` has synchronized with the network.

|

||||

|

||||

Make sure that you do not have `walletbroadcast=0` in your `~/.bitcoin/bitcoin.conf`, or you may run into trouble.

|

||||

Note that running `lightningd` against a pruned node may cause some issues if not managed carefully; see [pruning](doc:bitcoin-core#using-a-pruned-bitcoin-core-node) for more information.

|

||||

|

||||

You can start `lightningd` with the following command:

|

||||

|

||||

```bash

|

||||

lightningd --network=bitcoin --log-level=debug

|

||||

```

|

||||

|

||||

|

||||

|

||||

This creates a `.lightning/` subdirectory in your home directory: see `man -l doc/lightningd.8` (or [???](???)) for more runtime options.

|

||||

|

||||

## Using The JSON-RPC Interface

|

||||

|

||||

Core Lightning exposes a [JSON-RPC 2.0](https://www.jsonrpc.org/specification) interface over a Unix Domain socket; the [`lightning-cli`](ref:lightning-cli) tool can be used to access it, or there is a [python client library](???).

|

||||

|

||||

You can use `lightning-cli help` to print a table of RPC methods; `lightning-cli help <command>` gives specific information on that command. See [`lightning-cli`](ref:lightning-cli) for details of the tool itself.

|

||||

|

||||

Useful commands:

|

||||

|

||||

- [lightning-newaddr](ref:lightning-newaddr): get a bitcoin address to deposit funds into your lightning node.

|

||||

- [lightning-listfunds](ref:lightning-listfunds): see where your funds are.

|

||||

- [lightning-connect](ref:lightning-connect): connect to another lightning node.

|

||||

- [lightning-fundchannel](ref:lightning-fundchannel): create a channel to another connected node.

|

||||

- [lightning-invoice](ref:lightning-invoice): create an invoice to get paid by another node.

|

||||

- [lightning-pay](ref:lightning-pay): pay someone else's invoice.

|

||||

- [lightning-plugin](ref:lightning-plugin): commands to control extensions.

|

||||

|

||||

## Care And Feeding Of Your New Lightning Node

|

||||

|

||||

Once you've started for the first time, there's a script called `contrib/bootstrap-node.sh` which will connect you to other nodes on the lightning network.

|

||||

|

||||

There are also numerous plugins available for Core Lightning which add capabilities: see the [Plugins](doc:plugins) guide, and check out the plugin collection at: <https://github.com/lightningd/plugins>, including [helpme](https://github.com/lightningd/plugins/tree/master/helpme) which guides you through setting up your first channels and customising your node.

|

||||

|

||||

For a less reckless experience, you can encrypt the HD wallet seed: see [HD wallet encryption](doc:securing-keys).

|

||||

50

doc/guides/Beginner-s Guide/opening-channels.md

Normal file

|

|

@ -0,0 +1,50 @@

|

|||

---

|

||||

title: "Opening channels"

|

||||

slug: "opening-channels"

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T16:26:57.798Z"

|

||||

updatedAt: "2023-01-31T15:07:08.196Z"

|

||||

---

|

||||

First you need to transfer some funds to `lightningd` so that it can open a channel:

|

||||

|

||||

```shell
# Returns an address <address>
lightning-cli newaddr
```

|

||||

|

||||

|

||||

|

||||

`lightningd` will register the funds once the transaction is confirmed.

|

||||

|

||||

You may need to generate a p2sh-segwit address if the faucet does not support bech32:

|

||||

|

||||

```shell
# Return a p2sh-segwit address
lightning-cli newaddr p2sh-segwit
```

|

||||

|

||||

|

||||

|

||||

Confirm `lightningd` got funds by:

|

||||

|

||||

```shell
# Returns an array of on-chain funds.
lightning-cli listfunds
```

|

||||

|

||||

|

||||

|

||||

Once `lightningd` has funds, we can connect to a node and open a channel. Let's assume the **remote** node is accepting connections at `<ip>` (and optional `<port>`, if not 9735) and has the node ID `<node_id>`:

|

||||

|

||||

```shell
lightning-cli connect <node_id> <ip> [<port>]
lightning-cli fundchannel <node_id> <amount_in_satoshis>
```

|

||||

|

||||

|

||||

|

||||

This opens a connection and, on top of that connection, then opens a channel.

|

||||

|

||||

The funding transaction needs 3 confirmations in order for the channel to be usable, and 6 to be announced for others to use.

|

||||

|

||||

You can check the status of the channel using `lightning-cli listpeers`, which after 3 confirmations (1 on testnet) should say that `state` is `CHANNELD_NORMAL`; after 6 confirmations you can use `lightning-cli listchannels` to verify that the `public` field is now `true`.

|

||||

10

doc/guides/Beginner-s Guide/securing-keys.md

Normal file

|

|

@ -0,0 +1,10 @@

|

|||

---

|

||||

title: "Securing keys"

|

||||

slug: "securing-keys"

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T16:28:08.529Z"

|

||||

updatedAt: "2023-01-31T13:52:27.300Z"

|

||||

---

|

||||

You can encrypt the `hsm_secret` content (which is used to derive the HD wallet's master key) by passing the `--encrypted-hsm` startup argument, or by using the `hsmtool` (which you can find in the `tools/` directory at the root of the [Core Lightning repository](https://github.com/ElementsProject/lightning)) with the `encrypt` method. You can decrypt an encrypted `hsm_secret` using the `hsmtool` with the `decrypt` method.

|

||||

|

||||

If you encrypt your `hsm_secret`, you will have to pass the `--encrypted-hsm` startup option to `lightningd`. Once your `hsm_secret` is encrypted, you **will not** be able to access your funds without your password, so please be careful with your password management. Also, do not feel too safe with an encrypted `hsm_secret`: unlike `bitcoind`, where wallet encryption can restrict the use of some RPC commands, `lightningd` always needs access to keys from the wallet, which is thus **not locked** (yet), even with an encrypted BIP32 master seed.

|

||||

|

|

@ -0,0 +1,24 @@

|

|||

---

|

||||

title: "Sending and receiving payments"

|

||||

slug: "sending-and-receiving-payments"

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T16:27:07.625Z"

|

||||

updatedAt: "2023-01-31T15:06:02.214Z"

|

||||

---

|

||||

Payments in Lightning are invoice based.

|

||||

|

||||

The recipient creates an invoice with the expected `<amount>` in millisatoshi (or `"any"` for a donation), a unique `<label>` and a `<description>` the payer will see:

|

||||

|

||||

```shell

|

||||

lightning-cli invoice <amount> <label> <description>

|

||||

```

|

||||

|

||||

This returns some internal details, and a standard invoice string called `bolt11` (named after the [BOLT #11 lightning spec](https://github.com/lightning/bolts/blob/master/11-payment-encoding.md)).

|

||||

|

||||

The sender can feed this `bolt11` string to the `decodepay` command to see what it is, and pay it simply using the `pay` command:

|

||||

|

||||

```shell

|

||||

lightning-cli pay <bolt11>

|

||||

```

|

||||

|

||||

Note that there are lower-level interfaces (and more options to these interfaces) for more sophisticated use.

|

||||

13

doc/guides/Beginner-s Guide/watchtowers.md

Normal file

|

|

@ -0,0 +1,13 @@

|

|||

---

|

||||

title: "Watchtowers"

|

||||

slug: "watchtowers"

|

||||

excerpt: "Defend your node against breaches using a watchtower."

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T16:28:27.054Z"

|

||||

updatedAt: "2023-02-02T07:13:57.111Z"

|

||||

---

|

||||

The Lightning Network protocol assumes that a node is always online and synchronised with the network. Should your lightning node go offline for some time, it is possible that a node on the other side of your channel may attempt to force close the channel with an outdated state (also known as revoked commitment). This may allow them to steal funds from the channel that belonged to you.

|

||||

|

||||

A watchtower is a third-party service that you can hire to defend your node against such breaches, whether malicious or accidental, in the event that your node goes offline. It will watch for breaches on the blockchain and punish the malicious peer by relaying a penalty transaction on your behalf.

|

||||

|

||||

There are a number of watchtower services available today. One of them is the [watchtower client plugin](https://github.com/talaia-labs/rust-teos/tree/master/watchtower-plugin) that works with the [Eye of Satoshi tower](https://github.com/talaia-labs/rust-teos) (or any [BOLT13](https://github.com/sr-gi/bolt13/blob/master/13-watchtowers.md) compliant watchtower).

|

||||

74

doc/guides/Contribute to Core Lightning/code-generation.md

Normal file

|

|

@ -0,0 +1,74 @@

|

|||

---

|

||||

title: "Code Generation"

|

||||

slug: "code-generation"

|

||||

hidden: true

|

||||

createdAt: "2023-04-22T12:29:01.116Z"

|

||||

updatedAt: "2023-04-22T12:44:47.814Z"

|

||||

---

|

||||

The CLN project has a multitude of interfaces, most of which are generated from an abstract schema:

|

||||

|

||||

- Wire format for peer-to-peer communication: this is the binary format that is specified by the [LN spec](https://github.com/lightning/bolts). It uses the [generate-wire.py](https://github.com/ElementsProject/lightning/blob/master/tools/generate-wire.py) script to parse the (faux) CSV files that are automatically extracted from the specification and writes C source code files that are then used internally to encode and decode messages, as well as provide print functions for the messages.

|

||||

|

||||

- Wire format for inter-daemon communication: CLN follows a multi-daemon architecture, making communication explicit across daemons. For this inter-daemon communication we use a slightly altered message format from the [LN spec](https://github.com/lightning/bolts). The changes are

|

||||

1. addition of FD passing semantics to allow establishing a new connection between daemons (communication uses [socketpair](https://man7.org/linux/man-pages/man2/socketpair.2.html), so no `connect`)

|

||||

2. change the message length prefix from `u16` to `u32`, allowing for messages larger than 65 KB. The CSV files live alongside the respective sub-daemon and also use [generate-wire.py](https://github.com/ElementsProject/lightning/blob/master/tools/generate-wire.py) to generate encoding, decoding and printing functions

|

||||

|

||||

- We describe the JSON-RPC using [JSON Schema](https://json-schema.org/) in the [`doc/schemas`](https://github.com/ElementsProject/lightning/tree/master/doc/schemas) directory. Each method has a `.request.json` for the request message, and a `.schema.json` for the response (the mismatch is historical and will eventually be addressed). During tests the `pytest` target will verify responses; however, the JSON-RPC methods are _not_ generated (yet?). We do generate various client stubs for languages, using the [`msggen`](https://github.com/ElementsProject/lightning/tree/master/contrib/msggen) tool. More on the generated stubs and utilities below.
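
The length-prefix change in the inter-daemon format listed above can be pictured with a small sketch. This is not the code emitted by `generate-wire.py`: the helper names are hypothetical, and big-endian byte order is an assumption made here for illustration. It only shows why a `u32` prefix can describe messages that a `u16` prefix cannot.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: 2-byte big-endian length prefix (at most 65535 bytes). */
static size_t put_len_u16(uint8_t *out, uint16_t len)
{
	out[0] = (uint8_t)(len >> 8);
	out[1] = (uint8_t)(len & 0xff);
	return 2;
}

/* Hypothetical helper: 4-byte big-endian length prefix, so larger messages fit. */
static size_t put_len_u32(uint8_t *out, uint32_t len)
{
	out[0] = (uint8_t)(len >> 24);
	out[1] = (uint8_t)(len >> 16);
	out[2] = (uint8_t)(len >> 8);
	out[3] = (uint8_t)len;
	return 4;
}

/* Read back a 4-byte big-endian length prefix. */
static uint32_t get_len_u32(const uint8_t *in)
{
	return ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
	       ((uint32_t)in[2] << 8) | (uint32_t)in[3];
}
```

A 70000-byte message, for example, has a length that round-trips through the `u32` helpers but cannot be represented by the `u16` one.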

|

||||

|

||||

## Man pages

|

||||

|

||||

The manpages are partially generated from the JSON schemas using the [`fromschema`](https://github.com/ElementsProject/lightning/blob/master/tools/fromschema.py) tool. It reads the request schema and fills in the manpage between two markers:

|

||||

|

||||

```markdown
[comment]: # (GENERATE-FROM-SCHEMA-START)
...
[comment]: # (GENERATE-FROM-SCHEMA-END)
```

|

||||

|

||||

|

||||

|

||||

> 📘

|

||||

>

|

||||

> Some of this functionality overlaps with [`msggen`](https://github.com/ElementsProject/lightning/tree/master/contrib/msggen) (parsing the Schemas) and [blockreplace.py](https://github.com/ElementsProject/lightning/blob/master/devtools/blockreplace.py) (filling in the template). It is likely that this will eventually be merged.

|

||||

|

||||

## `msggen`

|

||||

|

||||

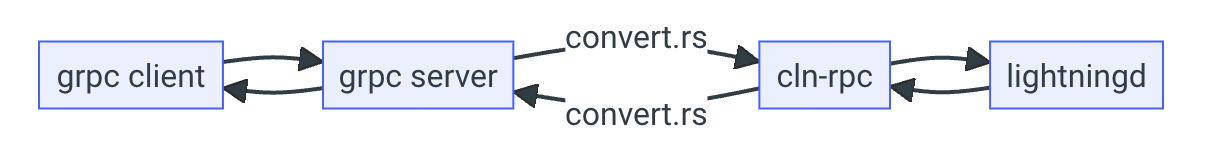

`msggen` is used to generate JSON-RPC client stubs, and converters between in-memory formats and the JSON format. In addition, by chaining some of these we can expose a [grpc](https://grpc.io/) interface that matches the JSON-RPC interface. This conversion chain is implemented in the [grpc-plugin](https://github.com/ElementsProject/lightning/tree/master/plugins/grpc-plugin).

|

||||

|

||||

[block:image]
{
  "images": [
    {
      "image": [
        "https://files.readme.io/8777cc4-image.png",
        null,
        null
      ],
      "align": "center",
      "caption": "Artifacts generated from the JSON Schemas using `msggen`"
    }
  ]
}
[/block]

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### `cln-rpc`

|

||||

|

||||

We use `msggen` to generate the Rust bindings crate [`cln-rpc`](https://github.com/ElementsProject/lightning/tree/master/cln-rpc). These bindings contain the stubs for the JSON-RPC methods, as well as types for the request and response structs. The [generator code](https://github.com/ElementsProject/lightning/blob/master/contrib/msggen/msggen/gen/rust.py) maps each abstract JSON-RPC type to a Rust type, minimizing size (e.g., binary data is hex-decoded).

|

||||

|

||||

The calling pattern follows the `call(req_obj) -> resp_obj` format, and the individual arguments are not expanded. For more ergonomic handling of generic requests and responses we also define the `Request` and `Response` enumerations, so you can hand them to a generic function without having to resort to dynamic dispatch.

|

||||

|

||||

The remainder of the crate implements an async/await JSON-RPC client, that can deal with the Unix Domain Socket [transport](ref:lightningd-rpc) used by CLN.

|

||||

|

||||

### `cln-grpc`

|

||||

|

||||

The `cln-grpc` crate is mostly used to provide the primitives to build the `grpc-plugin`. As mentioned above, the grpc functionality relies on a chain of generated parts:

|

||||

|

||||

- First `msggen` is used to generate the [protobuf file](https://github.com/ElementsProject/lightning/blob/master/cln-grpc/proto/node.proto), containing the service definition with the method stubs, and the types referenced by those stubs.

|

||||

- Next it generates the `convert.rs` file which is used to convert the structs for in-memory representation from `cln-rpc` into the corresponding protobuf structs.

|

||||

- Finally `msggen` generates the `server.rs` file which can be bound to a grpc endpoint listening for incoming grpc requests, and it will convert the request and forward it to the JSON-RPC. Upon receiving the response it gets converted back into a grpc response and sent back.

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,183 @@

|

|||

---

|

||||

title: "Coding Style Guidelines"

|

||||

slug: "coding-style-guidelines"

|

||||

hidden: false

|

||||

createdAt: "2023-01-25T05:34:10.822Z"

|

||||

updatedAt: "2023-01-25T05:50:05.437Z"

|

||||

---

|

||||

Style is an individualistic thing, but working on software is a group activity, so consistency is important. Generally our coding style is similar to the [Linux coding style](https://www.kernel.org/doc/html/v4.10/process/coding-style.html).

|

||||

|

||||

## Communication

|

||||

|

||||

We communicate with each other via code; we polish each other's code, and give nuanced feedback. Exceptions to the rules below always exist: accept them. Particularly if they're funny!

|

||||

|

||||

## Prefer Short Names

|

||||

|

||||

`num_foos` is better than `number_of_foos`, and `i` is better than `counter`. But `bool found;` is better than `bool ret;`. Be as short as you can while still being descriptive.

|

||||

|

||||

## Prefer 80 Columns

|

||||

|

||||

We have to stop somewhere. The two tools here are extracting deeply-indented code into its own function, and taking short-cuts with early returns or continues, eg:

|

||||

|

||||

```c
for (i = start; i != end; i++) {
	if (i->something)
		continue;

	if (!i->something_else)
		continue;

	do_something(i);
}
```

|

||||

|

||||

|

||||

|

||||

## Tabs and Indentation

|

||||

|

||||

The C code uses TAB characters with a visual indentation of 8 spaces.

|

||||

If you submit code for a review, make sure your editor knows this.

|

||||

|

||||

When breaking a line with more than 80 characters, align parameters and arguments like so:

|

||||

|

||||

```c
static void subtract_received_htlcs(const struct channel *channel,
				    struct amount_msat *amount)
```

|

||||

|

||||

|

||||

|

||||

Note: For more details, see the `.clang-format` and `.editorconfig` files in the project's root directory.

|

||||

|

||||

## Prefer Simple Statements

|

||||

|

||||

Notice the statement above uses separate tests, rather than combining them. We prefer to only combine conditionals which are fundamentally related, eg:

|

||||

|

||||

```c

|

||||

if (i->something != NULL && *i->something < 100)

|

||||

```

|

||||

|

||||

|

||||

|

||||

## Use of `take()`

|

||||

|

||||

Some functions have parameters marked with `TAKES`, indicating that they can take lifetime ownership of a parameter which is passed using `take()`. This can be a useful optimization which allows the function to avoid making a copy, but if you hand `take(foo)` to something which doesn't support `take()` you'll probably leak memory!

|

||||

|

||||

In particular, our automatically generated marshalling code doesn't support `take()`.

|

||||

|

||||

If you're allocating something simply to hand it via `take()` you should use NULL as the parent for clarity, eg:

|

||||

|

||||

```c
msg = towire_shutdown(NULL, &peer->channel_id, peer->final_scriptpubkey);
enqueue_peer_msg(peer, take(msg));
```

|

||||

|

||||

|

||||

|

||||

## Use of `tmpctx`

|

||||

|

||||

There's a convenient temporary context which gets cleaned regularly: you should use this for throwaways rather than (as you'll see some of our older code do!) grabbing some passing object to hang your temporaries off!

|

||||

|

||||

## Enums and Switch Statements

|

||||

|

||||

If you handle various enumerated values in a `switch`, don't use `default:` but instead mention every enumeration case-by-case. That way when a new enumeration case is added, most compilers will warn that you don't cover it. This is particularly valuable for code auto-generated from the specification!
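
As a minimal sketch of this rule (the `enum pay_status` type and its values are hypothetical, invented purely for illustration), every case is listed and there is no `default:`. If a new value is later added to the enum, compilers such as GCC and Clang with `-Wall` will typically flag the switch as incomplete.

```c
#include <stdlib.h>

/* Hypothetical enumeration, used only for this illustration. */
enum pay_status {
	PAY_PENDING,
	PAY_COMPLETE,
	PAY_FAILED,
};

static const char *pay_status_name(enum pay_status s)
{
	/* No default: label, so a newly added enum value triggers a
	 * compiler warning here instead of being silently ignored. */
	switch (s) {
	case PAY_PENDING:
		return "pending";
	case PAY_COMPLETE:
		return "complete";
	case PAY_FAILED:
		return "failed";
	}
	/* Only reachable if s holds a value outside the enum. */
	abort();
}
```

Placing `abort()` after the switch, rather than in a `default:` branch, keeps the compile-time check while still catching corrupted values at runtime.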

|

||||

|

||||

## Initialization of Variables

|

||||

|

||||

Avoid double-initialization of variables; it's better to set them when they're known, eg:

|

||||

|

||||

```c
bool is_foo;

if (bar == foo)
	is_foo = true;
else
	is_foo = false;

...
if (is_foo)...
```

|

||||

|

||||

|

||||

|

||||

This way the compiler will warn you if you have one path which doesn't set the variable. If you initialize with `bool is_foo = false;` then you'll simply get that value without warning when you change the code and forget to set it on one path.

|

||||

|

||||

## Initialization of Memory

|

||||

|

||||

`valgrind` warns about decisions made on uninitialized memory. Prefer `tal` and `tal_arr` to `talz` and `tal_arrz` for this reason, and initialize only the fields you expect to be used.

|

||||

|

||||

Similarly, you can use `memcheck(mem, len)` to explicitly assert that memory should have been initialized, rather than having valgrind trigger later. We use this when placing things on queues, for example.

|

||||

|

||||

## Use of static and const

|

||||

|

||||

Everything should be declared static and const by default. Note that `tal_free()` can free a const pointer (also, that it returns `NULL`, for convenience).
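
A small sketch of what this looks like in practice. It deliberately uses plain `malloc`/`free` rather than `tal`, and `free_and_null()` is a hypothetical stand-in that only mimics the two properties of `tal_free()` mentioned above (it accepts a const pointer and returns `NULL`).

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for tal_free(): accepts a const pointer, frees it,
 * and returns NULL so the caller can clear its pointer in one statement. */
static void *free_and_null(const void *p)
{
	free((void *)p);
	return NULL;
}

/* static: only used within this file.
 * const return type: callers must not modify the copy.
 * Returns NULL if allocation fails. */
static const char *copy_label(const char *label)
{
	char *copy = malloc(strlen(label) + 1);

	if (copy)
		strcpy(copy, label);
	return copy;
}
```

The calling idiom is then `label = free_and_null(label);`, which leaves no dangling pointer behind.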

|

||||

|

||||

## Typesafety Is Worth Some Pain

|

||||

|

||||

If code is typesafe, refactoring is as simple as changing a type and compiling to find where to refactor. We rely on this, so most places in the code will break if you hand the wrong type, eg `type_to_string` and `structeq`.

|

||||

|

||||

We have two tools to help us. One is the set of complicated macros in `ccan/typesafe_cb`, which allow you to create callbacks which must match the type of their argument, rather than using `void *`. The other is `ARRAY_SIZE`, a macro which won't compile if you hand it a pointer instead of an actual array.
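
To illustrate the idea behind `ARRAY_SIZE`, here is a simplified sketch. It is not the ccan implementation: `MY_ARRAY_SIZE` and `MUST_BE_ARRAY` are hypothetical names, and the check assumes the GCC/Clang extensions `__typeof__` and `__builtin_types_compatible_p`.

```c
#include <stddef.h>

/* Evaluates to 0 for a real array. For a pointer, the two types are
 * compatible, the array size becomes negative, and compilation fails. */
#define MUST_BE_ARRAY(arr)						\
	(sizeof(char[1 - 2 * __builtin_types_compatible_p(		\
		__typeof__(arr), __typeof__(&(arr)[0]))]) - 1)

/* Hypothetical stand-in for ccan's ARRAY_SIZE. */
#define MY_ARRAY_SIZE(arr) \
	(sizeof(arr) / sizeof((arr)[0]) + MUST_BE_ARRAY(arr))

/* Illustrative data only. */
static const int feerates[] = { 253, 1000, 5000, 10000 };

static size_t num_feerates(void)
{
	/* With "const int *p = feerates;", MY_ARRAY_SIZE(p) would not compile. */
	return MY_ARRAY_SIZE(feerates);
}
```

The point of the design is that the mistake is caught at compile time, so a refactor that turns an array into a pointer cannot silently produce a wrong element count.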

|

||||

|

||||

## Use of `FIXME`

|

||||

|

||||

There are two cases in which you should use a `/* FIXME: */` comment: one is where an optimization is possible but it's not clear that it's yet worthwhile, and the second one is to note an ugly corner case which could be improved (and may be in a following patch).

|

||||

|

||||

There are always compromises in code: eventually it needs to ship. `FIXME` is `grep`-fodder for yourself and others, as well as useful warning signs if we later encounter an issue in some part of the code.

|

||||

|

||||

## If You Don't Know The Right Thing, Do The Simplest Thing

|

||||

|

||||

Sometimes the right way is unclear, so it's best not to spend time on it. It's far easier to rewrite simple code than complex code, too.

|

||||

|

||||

## Write For Today: Unused Code Is Buggy Code

|

||||

|

||||

Don't overdesign: complexity is a killer. If you need a fancy data structure, start with a brute force linked list. Once that's working, perhaps consider your fancy structure, but don't implement a generic thing. Use `/* FIXME: ...*/` to salve your conscience.

|

||||

|

||||

## Keep Your Patches Reviewable

|

||||

|

||||

Try to make a single change at a time. It's tempting to do "drive-by" fixes as you see other things, and a minimal amount is unavoidable, but you can end up shaving infinite yaks. This is a good time to drop a `/* FIXME: ...*/` comment and move on.

|

||||

|

||||

## Creating JSON APIs

|

||||

|

||||

Our JSON RPCs always return a top-level object. This allows us to add warnings (e.g. that we're still starting up) or other optional fields later.

|

||||

|

||||

Prefer to use JSON names which are already in use, or otherwise names from the BOLT specifications.

|

||||

|

||||

The same command should always return the same JSON format: this is why e.g. `listchannels` returns an array even if given an argument so that there are only zero or one entries.

|

||||

|

||||

All `warning` fields should have unique names which start with `warning_`, the value of which should be an explanation. This allows for programs to deal with them sanely, and also perform translations.

|

||||

|

||||

### Documenting JSON APIs

|

||||

|

||||

We use JSON schemas to validate that JSON-RPC returns are in the correct form, and also to generate documentation. See [Writing JSON Schemas](doc:writing-json-schemas).

|

||||

|

||||

## Changing JSON APIs

|

||||

|

||||

All JSON API changes need a Changelog line (see below).

|

||||

|

||||

You can always add a new output JSON field (Changelog-Added), but you cannot remove one without going through a 6-month deprecation cycle (Changelog-Deprecated).

|

||||

|

||||

So, only output it if `allow-deprecated-apis` is true, so users can test their code is futureproof. In 6 months remove it (Changelog-Removed).

|

||||

|

||||

Changing existing input parameters is harder, and should generally be avoided. Adding input parameters is possible, but should be done cautiously as too many parameters get unwieldy quickly.

|

||||

|

||||

## Github Workflows

|

||||

|

||||

We have adopted a number of workflows to facilitate the development of Core Lightning, and to make things more pleasant for contributors.

|

||||

|

||||

### Changelog Entries in Commit Messages

|

||||

|

||||

We are maintaining a changelog in the top-level directory of this project. However since every pull request has a tendency to touch the file and therefore create merge-conflicts we decided to derive the changelog file from the pull requests that were added between releases. In order for a pull request to show up in the changelog at least one of its commits will have to have a line with one of the following prefixes:

|

||||

|

||||

- `Changelog-Added: ` if the pull request adds a new feature

|

||||

- `Changelog-Changed: ` if a feature has been modified and might require changes on the user side

|

||||

- `Changelog-Deprecated: ` if a feature has been marked for deprecation, but not yet removed

|

||||

- `Changelog-Fixed: ` if a bug has been fixed

|

||||

- `Changelog-Removed: ` if a (previously deprecated) feature has been removed

|

||||

- `Changelog-Experimental: ` if it only affects --enable-experimental-features builds, or experimental- config options.

|

||||

|

||||

In case you think the pull request is small enough not to require a changelog entry please use `Changelog-None` in one of the commit messages to opt out.

|

||||

|

||||

Under some circumstances a feature may be removed even without deprecation warning if it was not part of a released version yet, or the removal is urgent.

|

||||

|

||||

In order to ensure that each pull request has the required `Changelog-*:` line for the changelog our trusty @bitcoin-bot will check logs whenever a pull request is created or updated and search for the required line. If there is no such line it'll mark the pull request as `pending` to call out the need for an entry.

|

||||

|

|

@ -0,0 +1,64 @@

|

|||

---

|

||||

title: "Writing JSON Schemas"

|

||||

slug: "writing-json-schemas"

|

||||

hidden: false

|

||||

createdAt: "2023-01-25T05:46:43.718Z"

|

||||

updatedAt: "2023-01-30T15:36:28.523Z"

|

||||

---

|

||||

A JSON Schema is a JSON file which defines what a structure should look like; in our case we use it in our testsuite to check that command responses match it, and also to generate our documentation.

|

||||

|

||||

Yes, schemas are horrible to write, but they're damn useful. We can only use a subset of the full [JSON Schema Specification](https://json-schema.org/), but if you find that limiting it's probably a sign that you should simplify your JSON output.

|

||||

|

||||

## Updating a Schema

|

||||

|

||||

If you add a field, you should add it to the schema, and you must add `"added": "VERSION"` (where VERSION is the next release version!).

Similarly, if you deprecate a field, add `"deprecated": "VERSION"` (where VERSION is the next release version). They will be removed two versions later.

|

||||

|

||||

## How to Write a Schema

|

||||

|

||||

Name the schema doc/schemas/`command`.schema.json: the testsuite should pick it up and check all invocations of that command against it.

|

||||

|

||||

I recommend copying an existing one to start.

|

||||

|

||||

You will need to put the magic lines in the manual page so `make doc-all` will fill it in for you:

|

||||

|

||||

```markdown
[comment]: # (GENERATE-FROM-SCHEMA-START)
[comment]: # (GENERATE-FROM-SCHEMA-END)
```

|

||||

|

||||

|

||||

|

||||

If something goes wrong, try `tools/fromschema.py` doc/schemas/`command`.schema.json to see how far it got before it died.

|

||||

|

||||

You should always use `"additionalProperties": false`, otherwise your schema might not be covering everything. Deprecated fields simply have `"deprecated": true` in their properties, so they are allowed but omitted from the documentation.

|

||||

|

||||

You should always list all fields which are _always_ present in `"required"`.

|

||||

|

||||

We extend the basic types; see [fixtures.py](https://github.com/ElementsProject/lightning/tree/master/contrib/pyln-testing/pyln/testing/fixtures.py).

|

||||

|

||||

In addition, before committing a new schema or a new version of it, make sure that it is well formatted. If you don't want to do it by hand, use `make fmt-schema`, which uses jq under the hood.

|

||||

|

||||

### Using Conditional Fields

|

||||

|

||||

Sometimes one field is only sometimes present; if you can, you should make the schema know when it should (and should not!) be there.

|

||||

|

||||

There are two kinds of conditional fields expressible: fields which are only present if another field is present, or fields only present if another field has certain values.

|

||||

|

||||

To add conditional fields:

|

||||

|

||||

1. Do _not_ mention them in the main "properties" section.

|

||||

2. Set `"additionalProperties": true` for the main "properties" section.

|

||||

3. Add an `"allOf": [` array at the same level as `"properties"`. Inside this place one `if`/`then` for each conditional field.

|

||||

4. If a field simply requires another field to be present, use the pattern `"required": [ "field" ]` inside the "if".

|

||||

5. If a field requires another field value, use the pattern `"properties": { "field": { "enum": [ "val1", "val2" ] } }` inside the "if".

|

||||

6. Inside the "then", use `"additionalProperties": false` and place empty `{}` for all the other possible properties.

|

||||

7. If you haven't covered all the possibilities with `if` statements, add an `else` with `"additionalProperties": false` which simply mentions every allowable property. This ensures that the fields can _only_ be present when conditions are met.
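
The steps above can be sketched as the following schema fragment. The field names `status` and `preimage` are hypothetical and chosen only for illustration, and plain `"string"` is used here instead of the extended types; treat it as a starting point rather than a definitive template. Here `preimage` may only appear when `status` is `complete`:

```json
{
  "$schema": "http://json-schema.org/draft-07/schema#",
  "type": "object",
  "additionalProperties": true,
  "required": [ "status" ],
  "properties": {
    "status": { "type": "string", "enum": [ "pending", "complete" ] }
  },
  "allOf": [
    {
      "if": {
        "properties": { "status": { "enum": [ "complete" ] } }
      },
      "then": {
        "additionalProperties": false,
        "required": [ "preimage" ],
        "properties": {
          "status": {},
          "preimage": { "type": "string" }
        }
      },
      "else": {
        "additionalProperties": false,
        "properties": {
          "status": {}
        }
      }
    }
  ]
}
```

The empty `{}` entries only name the properties that are allowed in each branch; the actual type checks stay in the main "properties" section.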

|

||||

|

||||

### JSON Drinking Game!

|

||||

|

||||

1. Sip whenever you have an additional comma at the end of a sequence.

|

||||

2. Sip whenever you omit a comma in a sequence because you cut & paste.

|

||||

3. Skull whenever you wish JSON had comments.

|

||||

|

|

@ -0,0 +1,237 @@

|

|||

---

|

||||

title: "CLN Architecture"

|

||||

slug: "contribute-to-core-lightning"

|

||||

excerpt: "Familiarise yourself with the core components of Core Lightning."

|

||||

hidden: false

|

||||

createdAt: "2022-11-18T14:28:33.564Z"

|

||||

updatedAt: "2023-02-21T15:12:37.888Z"

|

||||

---

|

||||

The Core Lightning project implements the lightning protocol as specified in [various BOLTs](https://github.com/lightning/bolts). It's broken into subdaemons, with the idea being that we can add more layers of separation between different clients and extra barriers to exploits.

|

||||

|

||||

To read the code, you should start from [lightningd.c](https://github.com/ElementsProject/lightning/blob/master/lightningd/lightningd.c) and hop your way through the '~' comments at the head of each daemon in the suggested order.

|

||||

|

||||